I2R Laboratory

Interdisciplinary Intelligent Research (I2R) Laboratory

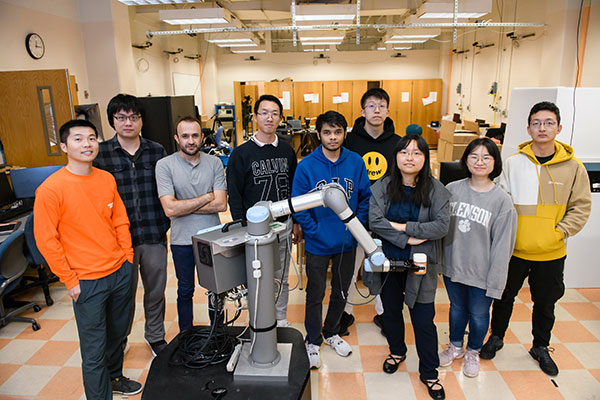

Research themes: Our research focuses on the modeling, learning, and control of autonomous systems and human-robot interaction systems. Our motivation is to understand how robots interact dynamically with complex and uncertain environments, so that we can realize intelligent real-time robot control and provide system-level performance guarantees such as safety, optimality, quality, and a balanced human experience.

We have been investigating human trust in robots, especially computational trust models for the control allocation of mobile robots and the motion planning of multi-robot systems. We are also working to integrate humans and robots to partially automate manufacturing processes. Our work in this area includes human-robot collaborative assembly in a hybrid manufacturing cell with a humanoid collaborative robot, as well as human-robot cooperative manipulation and transportation with mobile manipulators.

Our research includes symbolic motion planning for multi-robot systems with provably correct plans, human-centered autonomous vehicle control, and deep reinforcement learning for mobile robots and self-driving cars.

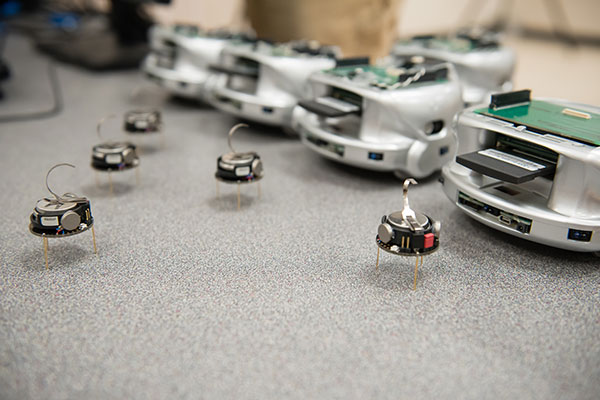

Lab facility/equipment: The Interdisciplinary Intelligent Research (I2R) Laboratory, directed by Dr. Yue “Sophie” Wang, features an 8 m × 7 m experimental area with reconfigurable drone flight paths; a Rethink Robotics Baxter humanoid manufacturing robot; a UR5 collaborative robot on a Clearpath Ridgeback mobile base; testbeds of heterogeneous mobile robot teams, including a Pioneer 3-AT (P3-AT) skid-steer ground mobile robot, a Clearpath Jackal ground mobile robot, a P3-DX nonholonomic wheeled mobile robot, a Pelican quadrotor, six Khepera III nonholonomic wheeled mobile robots, three AR.Drone 2.0 quadrotors, and thirty Kilobot swarm robots; a Logitech driving simulator; two Novint Falcon haptic devices; two Logitech joysticks; an Emotiv EEG sensor; and a PhaseSpace Impulse X2 motion tracking system.

The machine learning, control, and programming needs of these robotic testbeds are met by several Linux- and Windows-based PCs and GPU workstations. These computers are connected through local (in-lab) and campus-wide networks. The Robot Operating System (ROS) is used to integrate learning and control solutions across the heterogeneous multi-robot team. This open-source framework provides a large collection of drivers for various sensors and robots, as well as standard protocols for communication between them.

Yue Wang

Lab Director

(864) 656-5632

yue6@clemson.edu